Notes on using PostgreSQL

Run SQL query

When using a PostgreSQL connector to run SQL operations (such as SELECT, INSERT, UPDATE, or DELETE), the connector facilitates interaction between your application or tool and the PostgreSQL database.

A sample query to create a table in the Redshift database would be something similar to this: CREATE TABLE customer ( id SERIAL, name VARCHAR(50), email VARCHAR(100), age INT );

For the ease of working with the PostgreSQL queries, refer to the PostreSQL Queries document.

For the ease of working with the PostgreSQL queries, refer to the PostreSQL Queries document.

Insert single record

To Insert a single record operation allows you to insert a single customer record into a PostgreSQL table. Once inserted, you can verify the record by using the Find Rows operation to read the inserted data. The below example demonstrates how you can insert single record into a PostgreSQL table. While working with multiple records use batch insert method. Consider the following JSON payload received by a Webhook, which contains a single customer record:

{

"table": "customer",

"data": [

\{

"id": 1,

"name": "Alice Johnson",

"email": "alice.johnson@example.com",

"age": 35

\}

]

}

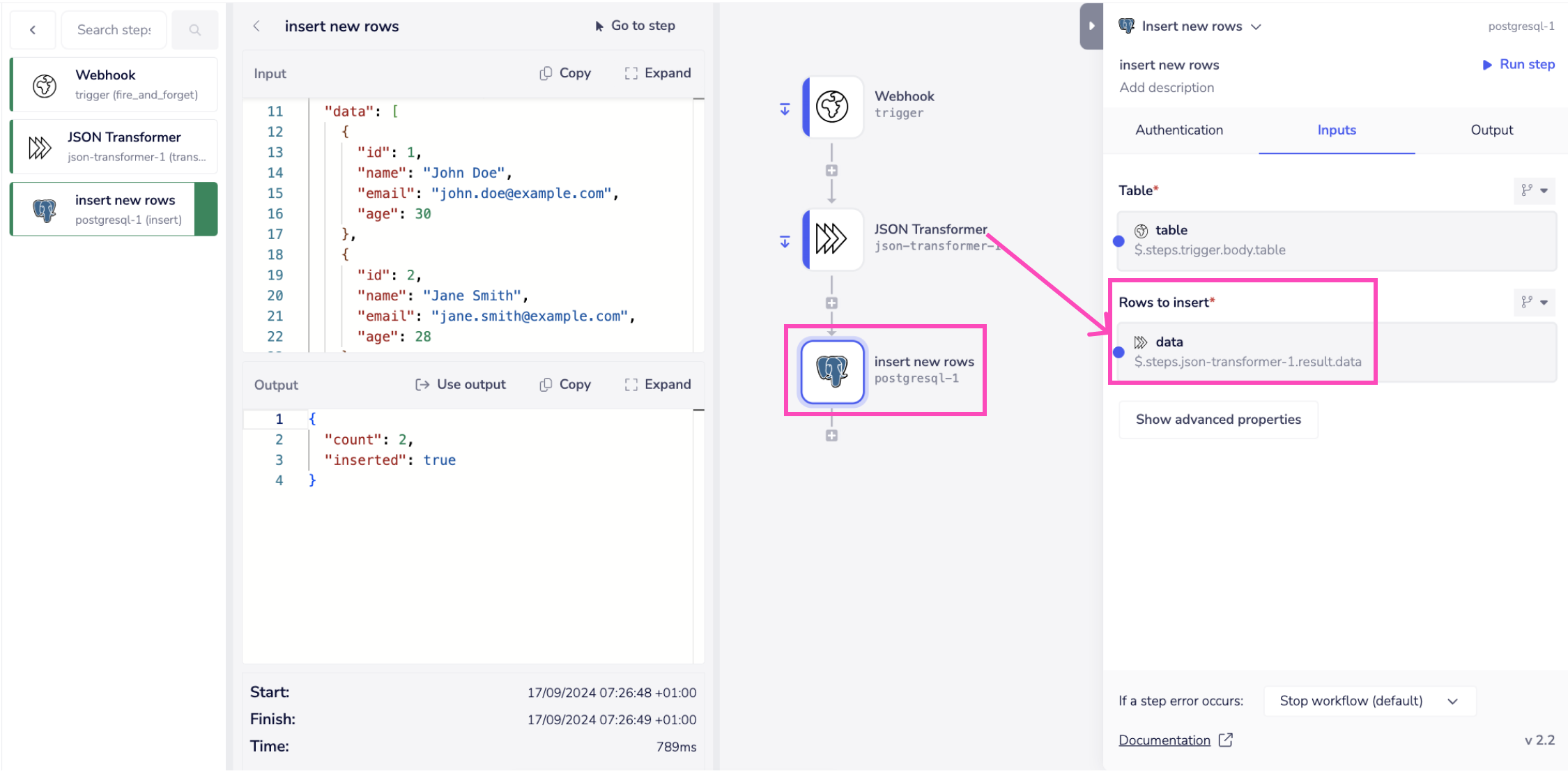

Batch insertion of records

The workflow uses Insert new rows operation to insert multiple records in a table. When inserting records, it's important to ensure that they are formatted correctly according to the table's schema. You can verify the expected format using sample payloads. The workflow is designed to receive and process various types of data through a Webhook. For example, let's consider a scenario where the following JSON payload is received:

{

"table": "customer",

"data": [

{

"id": 1001,

"name": \{

"first": "John",

"last": "Doe"

\},

"contact": \{

"email": "john.doe@example.com",

"phone": "+1-555-123-4567"

\}

},

{

"id": 1002,

"name": \{

"first": "Jane",

"last": "Smith"

\},

"contact": \{

"email": "jane.smith@example.com",

"phone": "+1-555-987-6543"

\}

}

]

}

To properly format this data for insertion into the PostgreSQL table, we used the JSON Transformer connector. Alternatively, you can achieve the same transformation using a script or Python connector. The following JSONata script is applied to structure the data as required for PostgreSQL:

{

"table": "customers",

"data": $map(data, function($customer, $index) {

\{

"id": $index + 1,

"name": $customer.name.first & " " & $customer.name.last,

"email": $customer.contact.email,

"age": $customer.id = 1001 ? 30 : 28

\}

})

}

This transformation prepares the customer data by extracting key fields like id, name, email, and age from the incoming JSON payload.

Once the data has been transformed, it is passed to the PostgreSQL connector to be inserted into the customers table.